Having moved over entirely to Linux, I must have missed that my audio recording drive (7200rpm) is still formatted to NTFS and not EXT4 like my other drives. Would switching over make a dramatic difference to write speeds for Ardour? Ideally I’d like to avoid re-formatting because I’d be needing to shift a lot of data but if the performance gains would be significant I’d take the time to do so.

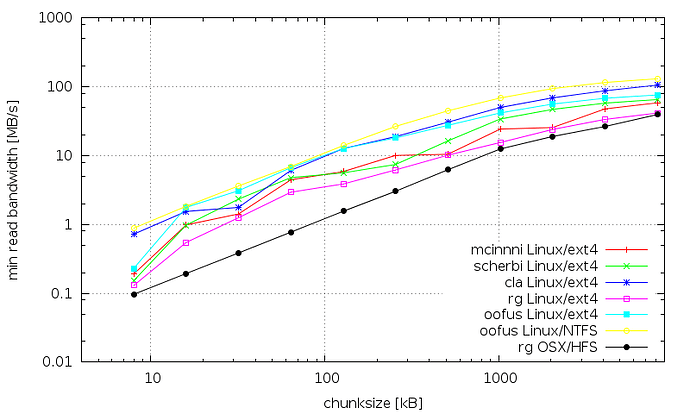

NTFS may actually be marginally better! Compare the yellow and turquoise lines:

Back in 2015 we measured read/write performance to determine the optimal read-chunk size for audio disk-streaming from spinning HDDs, with 7 volunteers on different systems. The full data set is available at https://gareus.org/wiki/hddspeed

If you want to check for yourself, the test tools are in Ardour’s source-tree: tools/run-*test.sh and tools/*test*cc (the first line of each source file shows how to compile it).

PS. The current record (held by oofus) was to record 1020 tracks concurrently on a 7200 rpm spinning HDD (32-bit float at 48 kHz; IIRC Ardour on GNU/Linux writing to an NTFS partition). SSDs can easily do more.
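To put that record in perspective, here is a quick back-of-envelope calculation of the sustained write rate it implies. It is a sketch assuming one mono channel per track, 4-byte float samples and no file-header or filesystem overhead; the helper name is hypothetical.

```python
def record_bandwidth_mib_s(tracks: int, sample_rate: int = 48_000,
                           bytes_per_sample: int = 4) -> float:
    """Sustained write rate in MiB/s for `tracks` mono tracks recorded at once.

    Assumes raw samples only: no file headers, no filesystem metadata.
    """
    bytes_per_second = tracks * sample_rate * bytes_per_sample
    return bytes_per_second / 2**20


if __name__ == "__main__":
    # 1020 tracks of 32-bit float at 48 kHz
    print(f"{record_bandwidth_mib_s(1020):.2f} MiB/s")
```

For 1020 tracks this comes to roughly 187 MiB/s of raw audio data.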

As for NTFS vs ext4: I expect ext4 is safer in case of a power outage or a system crash. I don’t know which mount options were used, but I assume ext4 is a tad slower since it keeps a journal and more metadata.

Other considerations are fragmentation (NTFS only) if the disk fills up or if you regularly delete files…

Blow me down with a feather. Thanks so much for the detailed response.

This is my impression and part of why I don’t use NTFS unless I have to (which isn’t often anymore, thanks to exFAT). I am pretty sure that when I used it more, I occasionally had to rebuild it in Windows, and OS X support in particular could be hit and miss. So the safety aspect really can’t be overstated.

For general use you can read and write to it, but if something goes wrong I am not sure you can recover as easily from it.

@Seablade I think XFS might be the filesystem with the best overall performance today. It is also the default for Red Hat / CentOS distros.

Here is a recent performance comparison with ext4 and a couple of other filesystems:

https://www.phoronix.com/scan.php?page=article&item=linux-58-filesystems&num=1

Quote:

When taking the geometric mean of all the test results, XFS was the fastest while F2FS delivered 95% the performance of XFS for this modern flash-optimized file-system. Btrfs came in a distant third place finish for performance from this single NVMe SSD drive benchmark followed by EXT4 and then NILFS2.

I’ve been using XFS a couple of years on all my data drives (SSD and magnetic). It performs very well and the experience has been totally problem free.

Keep in mind that for an average session, Ardour reads 256kB chunks of 100 files sequentially. The I/O scheduler and disk readahead play a much more significant role than the filesystem.
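The access pattern described above can be illustrated with a small round-robin reader: one fixed-size chunk from each file in turn, until every file is exhausted. This is a minimal sketch using plain Python file objects, not Ardour’s actual disk-reader code, and the function name is hypothetical. It records the order of reads to show how they hop from file to file.

```python
import io
from typing import BinaryIO, List, Tuple


def round_robin_read(files: List[BinaryIO],
                     chunk_size: int = 256 * 1024) -> List[Tuple[int, int]]:
    """Read one chunk from each file in turn until every file is exhausted.

    Returns the sequence of (file_index, bytes_read) events. On a spinning
    disk, each hop to a different file can cost a head seek.
    """
    events: List[Tuple[int, int]] = []
    active = list(enumerate(files))
    while active:
        still_active = []
        for index, f in active:
            data = f.read(chunk_size)
            if data:
                events.append((index, len(data)))
                still_active.append((index, f))
        active = still_active
    return events


if __name__ == "__main__":
    # In-memory stand-ins for audio files (tiny hypothetical sizes)
    tracks = [io.BytesIO(b"\0" * 10), io.BytesIO(b"\0" * 3)]
    print(round_robin_read(tracks, chunk_size=4))
    # [(0, 4), (1, 3), (0, 4), (0, 2)]
```

With real files you would pass objects from `open(path, "rb")`; whether consecutive reads turn into head movements is then up to the page cache, readahead and the I/O scheduler.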

There has been a lot of work on optimizing both ext4 and XFS since 2015; maybe it’s time for another benchmark.

@x42 I would be very interested in doing a performance test like you did back in 2015. I’d like to test ext4 against XFS on my system (Threadripper 2950X 16-Core Processor + Samsung 500 GB SSD 850). Can the test recipe be found somewhere on the net? I could upload my results to this thread. I guess you used something like gnuplot to create the graphs for the tests?

Edit: never mind, you already answered my question in your previous post. I’ll download the source and do some tests.


Yes, that’s the same article I posted a link to five posts above.

On SSDs those tests make little sense, since there is no overhead when seeking to chunks. They can be directly addressed.

In Ardour’s case the bottleneck is due to reading small parts of many files during playback, or due to writing many small chunks when recording. This is particularly bad for HDDs where the disk’s head needs to move to seek for each file access. SSDs do not have this issue.
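A rough back-of-envelope model makes the HDD argument concrete: if every chunk read costs one seek plus the transfer time, the achievable track count depends strongly on chunk size. The seek time (8 ms) and sequential rate (150 MB/s) below are assumed round numbers for a 7200 rpm disk, not measurements from this thread, and the model ignores readahead, caching and the I/O scheduler. The function name is hypothetical.

```python
def max_tracks(chunk_bytes: int,
               seek_s: float = 0.008,        # assumed average seek + rotational latency
               transfer_bps: float = 150e6,  # assumed sequential rate in bytes/s
               sample_rate: int = 48_000,
               bytes_per_sample: int = 4) -> float:
    """Rough upper bound on real-time tracks for a seek-bound HDD.

    Each track consumes one chunk per round; reading it costs one seek plus
    the transfer time. Playback keeps up if all tracks can be serviced
    before one chunk's worth of audio has been played.
    """
    audio_s_per_chunk = chunk_bytes / (sample_rate * bytes_per_sample)
    disk_s_per_chunk = seek_s + chunk_bytes / transfer_bps
    return audio_s_per_chunk / disk_s_per_chunk


if __name__ == "__main__":
    for kib in (64, 256, 1024, 8192):
        print(f"{kib:>5} KiB chunks: ~{max_tracks(kib * 1024):.0f} tracks")
```

As chunks grow, the seek cost is amortized and the bound approaches `transfer_bps / (sample_rate * bytes_per_sample)`, about 781 tracks with these assumed numbers. On an SSD `seek_s` is close to zero, so chunk size matters far less.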

OK, thanks for the info. I’m interested in this subject, so I’ll do some tests on spinning disks and an SSD anyway. Just got your tests working and can’t stop now.

I wonder: should I use the -M option (use mmap) to get results comparable to your old tests? I’m doing these tests on Linux.

I guess the block sizes on the run-readtest.sh command line should be in bytes, not kilobytes?

If ext4, NTFS and XFS turn out to perform the same on an SSD, that’s a result too.

Yes, e.g. something like

./run-readtest.sh -f 10 -d /path/to/test/files/ 8192 16384 32768 65536 131072 262144 524288 1048576 2097152 4194304 8388608
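Since the block sizes are given in bytes, the list above is simply the powers of two from 8 KiB (8192) to 8 MiB (8388608). A small Python sketch that builds the same argument list; the function name is hypothetical, the `-f` value is just passed through as in the example (its meaning is defined by the script), and the script has to be run from Ardour’s tools/ directory.

```python
from typing import List


def readtest_args(test_dir: str, f_value: int = 10) -> List[str]:
    """Build the run-readtest.sh command line shown above.

    Block sizes are in bytes: 8192 << 0 ... 8192 << 10, i.e. 8 KiB to 8 MiB.
    """
    block_sizes = [8192 << i for i in range(11)]
    return ["./run-readtest.sh", "-f", str(f_value), "-d", test_dir] + [
        str(size) for size in block_sizes
    ]


if __name__ == "__main__":
    print(" ".join(readtest_args("/path/to/test/files/")))
```

The resulting list can be handed to `subprocess.run(args, check=True)` to run the benchmark from a script.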

I have two Seagate Ironwolf Pro 12 TB spinning disks. It seems I get insane results from both of them (the results do not reflect real drive seek/read speed). This might have something to do with the drive’s big 256 MB cache. Maybe the 10 MB test-file size is not big enough anymore to really test this drive. I guess there is no way to get results comparable to the 2015 test with big modern drives anymore. Or did I do something wrong with my tests?

Linux mika-pc 5.4.44-1-MANJARO #1 SMP PREEMPT Wed Jun 3 14:48:07 UTC 2020 x86_64 GNU/Linux

Filesystem xfs

Command: ./run-readtest.sh -f 10 -d testfiles 8192 16384 32768 65536 131072 262144 524288 1048576 2097152 4194304 8388608

Output (track counts at 48000 SPS):

| Blocksize (bytes) | Min (MB/sec) | Avg (MB/sec) | Max (sec) | Max track count | Sus track count | Seeks | Bytes | Total time (sec) | 5th summary value |
|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|
| 8192 | 73.3084 | 3319.4074 | 0.0136 | 18128 | 400 | 163840 | 1342177280 | 0.385611 | 0.00037 |
| 16384 | 3003.0030 | 3877.4601 | 0.0007 | 21176 | 16400 | 81920 | 1342177280 | 0.330113 | 0.00003 |
| 32768 | 3088.8031 | 4096.8915 | 0.0013 | 22374 | 16868 | 40960 | 1342177280 | 0.312432 | 0.00006 |
| 65536 | 3357.1129 | 4160.3828 | 0.0024 | 22721 | 18334 | 20480 | 1342177280 | 0.307664 | 0.00012 |
| 131072 | 3557.1365 | 4155.7092 | 0.0045 | 22695 | 19426 | 10240 | 1342177280 | 0.308010 | 0.00023 |
| 262144 | 3337.1572 | 3643.1324 | 0.0096 | 19896 | 18225 | 5120 | 1342177280 | 0.351346 | 0.00070 |
| 524288 | 2521.8693 | 2699.4481 | 0.0254 | 14742 | 13772 | 2560 | 1342177280 | 0.474171 | 0.00322 |
| 1048576 | 1547.7069 | 1724.1194 | 0.0827 | 9415 | 8452 | 1280 | 1342177280 | 0.742408 | 0.01728 |
| 2097152 | 1019.3071 | 1172.6487 | 0.2512 | 6404 | 5566 | 640 | 1342177280 | 1.091546 | 0.06593 |
| 4194304 | 849.5148 | 851.6329 | 0.6027 | 4651 | 4639 | 256 | 1073741824 | 1.202396 | 0.00212 |
| 8388608 | 189.4343 | 189.4343 | 5.4056 | 1034 | 1034 | 128 | 1073741824 | 5.405569 | 0.00000 |

I was mistaken: I can get sane results from my 12 TB disks and also from an SSD. It seems that dropping the read cache does not work while there is unwritten (dirty) data in the cache, so run-readtest.sh only needed a sync command before the final for-loop, which drops the cache by writing 3 to /proc/sys/vm/drop_caches.
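The fix amounts to flushing dirty pages before writing to drop_caches, since the kernel only discards clean cached pages. A minimal Python sketch of that step (Linux only, and the real control file needs root); the function name is hypothetical, and the path parameter exists only so the function can be exercised against a harmless target such as `os.devnull`.

```python
import os


def flush_and_drop_caches(control_file: str = "/proc/sys/vm/drop_caches") -> None:
    """Flush dirty data to disk, then ask the kernel to drop clean caches.

    Writing "3" frees the page cache plus dentries and inodes. Dirty pages
    are not dropped, which is why os.sync() (the equivalent of `sync`)
    has to come first. Writing to /proc/sys/vm/drop_caches requires root.
    """
    os.sync()
    with open(control_file, "w") as f:
        f.write("3\n")
```

Calling `flush_and_drop_caches()` as root between runs should have the same effect as the sync plus drop_caches step described above.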

I will make some kind of table comparing NTFS, ext4 and XFS performance on a spinning disk and an SSD.

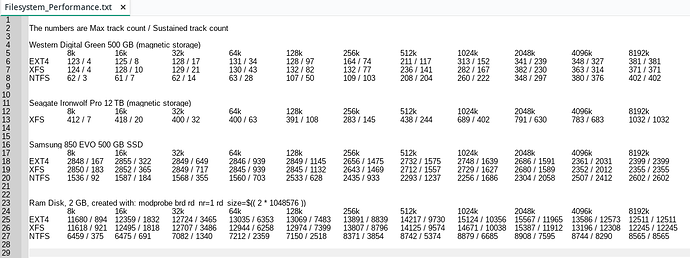

OK, I have some read results for a spinning disk, an SSD and a RAM disk.

First of all, these are very unscientific: I noticed that the results fluctuated a lot when a measurement was repeated. I should have done at least 10 repeats, but I only did two. I’m also going to make some assumptions here, and I hope someone who knows better corrects me if I get something wrong.

A single measurement looks like this. The cache is dropped between tests, and the disk is read with block sizes from 8 KB to 8 MB.

Output (track counts at 48000 SPS):

| Blocksize (bytes) | Min (MB/sec) | Avg (MB/sec) | Max (sec) | Max track count | Sus track count | Seeks | Bytes | Total time (sec) | 5th summary value |
|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|
| 8192 | 1.2768 | 75.7656 | 0.7832 | 413 | 6 | 163840 | 1342177280 | 16.894210 | 0.05556 |
| 16384 | 2.6601 | 80.0134 | 0.7519 | 436 | 14 | 81920 | 1342177280 | 15.997314 | 0.07237 |
| 32768 | 5.7018 | 76.2784 | 0.7015 | 416 | 31 | 40960 | 1342177280 | 16.780642 | 0.09829 |
| 65536 | 8.9448 | 78.7388 | 0.8944 | 430 | 48 | 20480 | 1342177280 | 16.256281 | 0.12506 |
| 131072 | 17.0888 | 73.0745 | 0.9363 | 399 | 93 | 10240 | 1342177280 | 17.516379 | 0.09900 |
| 262144 | 25.2024 | 48.8645 | 1.2697 | 266 | 137 | 5120 | 1342177280 | 26.194883 | 0.56400 |
| 524288 | 43.5731 | 78.4967 | 1.4688 | 428 | 237 | 2560 | 1342177280 | 16.306410 | 0.61497 |
| 1048576 | 79.5081 | 138.2492 | 1.6099 | 755 | 434 | 1280 | 1342177280 | 9.258640 | 0.57738 |
| 2097152 | 113.5901 | 140.6556 | 2.2537 | 768 | 620 | 640 | 1342177280 | 9.100242 | 0.41770 |
| 4194304 | 117.9922 | 137.1262 | 4.3393 | 748 | 644 | 256 | 1073741824 | 7.467572 | 0.85628 |
| 8388608 | 190.0531 | 190.0531 | 5.3880 | 1037 | 1037 | 128 | 1073741824 | 5.387968 | 0.00000 |

I made a table of “Max Track count” and “Sus track count” values, assuming they mean “maximum theoretical track count” and “track count for sustained recording”. These values seem to correspond to the Average MB/sec and Minimum MB/sec measurements, respectively.
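The printed numbers support that reading. Both counts appear to be a throughput divided by the data rate of one mono 32-bit float track at 48 kHz (192000 bytes/s), with “MB” meaning MiB (2^20 bytes) and the result rounded down; Max is derived from Avg MB/sec and Sus from Min MB/sec. This is inferred from the output, not taken from the tool’s source, so treat the functions below (hypothetical names) as a reconstruction.

```python
import math

BYTES_PER_TRACK_PER_S = 48_000 * 4  # mono, 32-bit float, 48 kHz


def tracks_from_mb_per_s(mb_per_s: float) -> int:
    """Track count supported by a throughput in MiB/s, rounded down."""
    return math.floor(mb_per_s * 2**20 / BYTES_PER_TRACK_PER_S)


def avg_mb_per_s(total_bytes: int, total_time_s: float) -> float:
    """Average throughput in MiB/s from the 'bytes:' and 'total_time:' fields."""
    return total_bytes / 2**20 / total_time_s


if __name__ == "__main__":
    # 8388608-byte blocksize row of the example measurement above
    avg = avg_mb_per_s(1073741824, 5.387968)
    print(f"Avg {avg:.4f} MB/s -> Max track count {tracks_from_mb_per_s(avg)}")
```

For example, the Min of 1.2768 MB/sec at the 8192-byte blocksize gives 6, matching the Sus track count printed for that row.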

Staring at the results, I realized that because the file cache is dropped before testing, the measurement program probably looks at the worst-case raw performance of the disk. We always have the cache enabled while running Ardour, so these track counts only show disk performance in a situation where the cache cannot help at all.

Another thing I noticed in the results for the SSD and the formatted RAM disk is that the track counts are crazy high. I’m never going to have a session with more than 300 tracks. I’ve seen a real-world session with 150 tracks, but 300+ becomes unmanageable; the tracks should then be split into several sessions and stem mixes made. So the SSD and RAM disk measurements are only useful for showing whether a filesystem adds significant overhead: if it does, its values will be lower than those of the other filesystems. NTFS was mounted with ntfs-3g, which uses FUSE, which in turn has a performance penalty. This can be seen clearly in the RAM disk test. I don’t know if there is a way to mount NTFS without FUSE.

All tests were done on a 16-core AMD Threadripper 2950X with 64 GB RAM, running Manjaro Linux with kernel 5.4.44-1-MANJARO #1 SMP PREEMPT Wed Jun 3 14:48:07 UTC 2020 x86_64 GNU/Linux and the performance CPU governor enabled.

I tested an old Western Digital Caviar Green 500 GB spinning disk, a Samsung 500 GB SSD and a formatted RAM disk. I thought the RAM disk test would be fun, and that it might also bring out differences in overhead between the filesystems, and I think it did. The RAM disk can be thought of as an ideal hard disk. I also tested a big, modern Seagate Ironwolf Pro 12 TB spinning disk, but as these disks hold data I could not reformat them to test filesystems other than XFS.

EXT4 seems to be the overall winner by a very thin margin over XFS. The difference is so small that I reformatted my EXT4 audio SSD to XFS.

Now the only thing I wonder is: does Ardour use buffer sizes other than 256 KB when reading from disk? The most efficient block size in all these tests seems to be 8 MB. Compared to 256 KB, 8 MB blocks doubled or tripled the track count on spinning disks; on an SSD they only increased the sustained track count.

Nice

It looks like it’s mostly independent and XFS vs ext4 is mostly noise. It’s interesting that NTFS performs better on disks (even SSD) with large block-sizes.

Preferences > Audio > Buffering. The default “Medium” preset reads in chunks of 256k samples and writes 128k samples at a time (at 4 bytes/sample that’s 1 MB and 512 kB respectively).

You have to use at least twice the chunk size as buffer (read one chunk while playing back the other). So with 8 MB chunks, Ardour would have to allocate an rt-safe ringbuffer of at least 16 MB per track. At 48 kHz and 32-bit float, that corresponds to around 1½ minutes of audio.
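The arithmetic in the last two paragraphs, spelled out as a short sketch. It assumes 4-byte float samples, 1 MB = 2^20 bytes and a buffer of exactly two chunks; the helper names are hypothetical.

```python
BYTES_PER_SAMPLE = 4  # 32-bit float
SAMPLE_RATE = 48_000


def chunk_bytes(samples: int) -> int:
    """Size in bytes of a read/write chunk given in samples."""
    return samples * BYTES_PER_SAMPLE


def ringbuffer_seconds(chunk_size_bytes: int, chunks: int = 2) -> float:
    """Audio duration held by a per-track ring buffer of `chunks` chunks.

    Two chunks is the minimum: one is being filled from disk while the
    other is being played back.
    """
    total_bytes = chunks * chunk_size_bytes
    return total_bytes / (SAMPLE_RATE * BYTES_PER_SAMPLE)


if __name__ == "__main__":
    print(chunk_bytes(256 * 1024))  # "Medium" read chunk: 1048576 bytes (1 MB)
    print(chunk_bytes(128 * 1024))  # "Medium" write chunk: 524288 bytes (512 kB)
    print(f"{ringbuffer_seconds(8 * 2**20):.1f} s")  # 2 x 8 MB = 16 MB per track
```

The last line prints 87.4 s, i.e. the “around 1½ minutes” of audio per track mentioned above.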

One thing to be aware of regarding filesystem performance is that the performance can change dramatically in some cases as filesystems fill up.

Where I work, we use XFS for data storage specifically for that reason. Its performance stays fairly flat and deterministic as the storage device fills up. Such is not the case for EXT4.