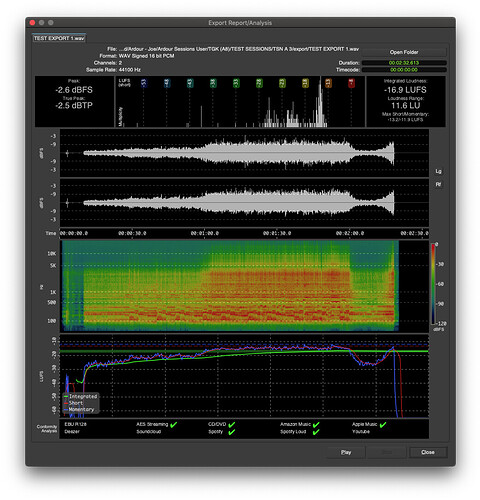

Ah, well that’s a whole different problem than just lowering the level of part of a track that’s too loud. The Loudness analyser really isn’t going to help you much with that, as it only shows the loudness of the combined, mixed track.

What you are talking about here is altering the mix, which is about the relative levels of the individual tracks. There’s no magic-bullet tool that can tell you the answer to this, as a lot of it is about artistic intent. But there’s also a lot of experience involved.

One useful technique is to use reference songs: songs from other artists in the genre that you like and think have a mix or “vibe” that you would like to emulate. Load one or two of these into separate tracks in Ardour, use mute/solo to A/B them against your song, and try to work out where your mix differs.

Maybe not. But I would consider that to be an artistic decision too. In which case, I would suggest keeping each track as a separate song whilst you are editing/mixing them. If the genre is metal, then that also drives the artistic intent when it comes to dynamic range and loudness.

If you were doing instrumental jazz, you would probably take a different approach.

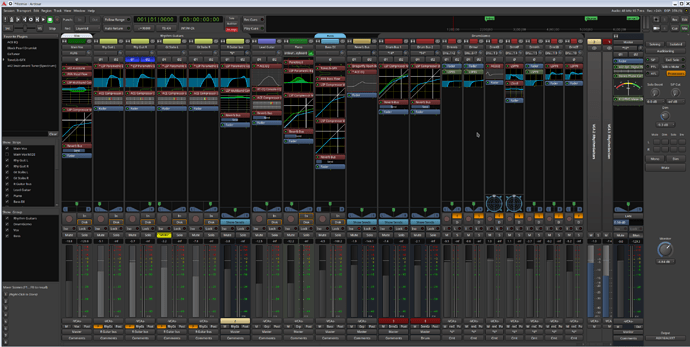

But it is important to remember that the production process normally includes “mixing” and “mastering” as separate steps.

Mixing is about getting the relative levels, EQ, stereo panning, “space”, etc. of your song the way you want them. This includes things like automation (e.g. pushing the guitar louder during the solo), effects (reverb, etc.), and editing to fix things that you can’t easily re-record (e.g. cutting out background noise on the vocal mic when there’s no singing, or replacing part of the bass when a bad note was played).

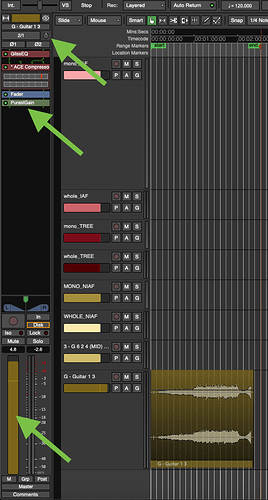

Mixing can, and usually does, include the application of EQ and compression on each track (or groups of related tracks). This is so fundamental that many recording studio consoles have built-in EQ and compression on every channel strip, as well as faders for level and pan controls.

Mastering is about taking the finished mix and getting it to sound good on the target playback medium and environment. In the analogue days, when media like tape and vinyl had significant compromises, mastering made sure that the audio worked well within the physical constraints of those media.

In the modern digital streaming world the same principle applies, although digital media have far fewer constraints.

Mastering primarily involves application of EQ, gain, compression, and limiting to the finished mix, rather than to individual instrument tracks.
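To give a feel for what “compression” and “limiting” actually do to levels, here is a minimal sketch of the steady-state curve of a basic hard-knee compressor. It is a simplification under stated assumptions: it ignores attack/release times, soft knees and make-up gain, the function name and the -18 dB / 4:1 settings are hypothetical, and it is not how any particular Ardour plugin is implemented.

```python
def compressed_level_db(input_db: float, threshold_db: float, ratio: float) -> float:
    """Steady-state output level (dB) of a simplified hard-knee compressor.

    Below the threshold the signal passes through unchanged; above it,
    every `ratio` dB of extra input produces only 1 dB of extra output.
    A limiter behaves like a compressor with a very high (here: infinite) ratio.
    """
    if ratio < 1:
        raise ValueError("ratio must be >= 1")
    if input_db <= threshold_db:
        return input_db
    return threshold_db + (input_db - threshold_db) / ratio


if __name__ == "__main__":
    # Hypothetical settings: threshold -18 dB, 4:1 ratio.
    for level in (-24.0, -18.0, -10.0, -2.0):
        print(level, "->", compressed_level_db(level, -18.0, 4.0))
    # Treat an infinite ratio as a (very idealised) limiter.
    print(-2.0, "->", compressed_level_db(-2.0, -18.0, float("inf")))
```

With these settings, a peak 8 dB over the threshold comes out only 2 dB over it, which is why compression reduces dynamic range and why a limiter puts a ceiling on peaks.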

If your vocal is too loud, then you have to address that in the mixing stage, not when you are mastering.
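The reason is simple arithmetic: a gain change on the finished mix multiplies every instrument by the same factor, so the balance between them does not move, whereas a change to the vocal fader alone does. The sketch below shows this for plain gain only, using hypothetical peak amplitudes for a “vocal” and a “band” stem (all names and numbers are made up for illustration). EQ and compression on the master also act on the summed signal, so they cannot cleanly single out the vocal either.

```python
import math


def db_to_gain(db: float) -> float:
    """Convert a fader setting in dB to a linear amplitude multiplier."""
    return 10 ** (db / 20)


def gain_to_db(gain: float) -> float:
    """Convert a linear amplitude ratio to dB."""
    return 20 * math.log10(gain)


def vocal_to_band_db(vocal_peak: float, band_peak: float) -> float:
    """Level difference in dB between the vocal and the rest of the band."""
    return gain_to_db(vocal_peak / band_peak)


# Hypothetical peak amplitudes of two stems (linear, full scale = 1.0).
vocal, band = 0.8, 0.4
print("as mixed:", round(vocal_to_band_db(vocal, band), 2), "dB")

# Mastering-style change: one multiplier applied to the whole mix.
master = db_to_gain(-6.0)
print("after -6 dB on the master:",
      round(vocal_to_band_db(vocal * master, band * master), 2), "dB")

# Mixing-style change: only the vocal fader is pulled down.
vocal_fader = db_to_gain(-6.0)
print("after -6 dB on the vocal fader:",
      round(vocal_to_band_db(vocal * vocal_fader, band), 2), "dB")
```

The vocal sits about 6 dB above the band both before and after the master gain change; only the vocal fader change brings the two close together.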

I hope that’s somewhat helpful.

Cheers,

Keith