Yes, Ardour is non-destructive. Region gain cut/boost is just a fixed region gain factor applied on playback. You can also inspect and manually enter a value in Region > Properties > Region gain:

Would I be able to use a Lua script to simultaneously increase gain boost while reducing track volume, thus effectively creating a vertical zoom?

Likely yes, but do keep the gain structure of your session in mind. Increasing region gain will increase the level of any signal going into a pre-fader insert. This is why a good gain structure is beneficial to start with: some plugins don’t handle very low signal levels well. How much this affects you depends on your own workflow and plugins.
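To make the gain-structure point concrete, here is a minimal sketch of the arithmetic in Python. It is not Ardour's Lua API: the function names and the simplified signal chain (region gain, then pre-fader insert, then track fader) are assumptions made for illustration only.

```python
import math

def db_to_factor(db):
    """Convert a gain in dB to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)

def factor_to_db(factor):
    """Convert a linear amplitude factor to dB."""
    return 20.0 * math.log10(factor)

def levels_after_compensation(source_peak_db, region_boost_db):
    """Boost the region and cut the track fader by the same amount.

    Returns (pre_fader_db, post_fader_db) for an assumed chain:
    region gain -> pre-fader insert -> track fader.
    """
    fader_db = -region_boost_db  # compensating fader cut
    pre_fader_db = source_peak_db + region_boost_db
    post_fader_db = pre_fader_db + fader_db
    return pre_fader_db, post_fader_db

pre, post = levels_after_compensation(source_peak_db=-40.0, region_boost_db=30.0)
print(f"pre-fader: {pre:+.1f} dB, post-fader: {post:+.1f} dB")
```

With these example numbers the output level is unchanged, but anything inserted pre-fader sees a signal 30 dB hotter than before, which is the part worth checking against your plugins.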

Seablade: Are these all in one track?

Have you thought of bouncing them to multiple tracks, so you can work on them individually?

Cheers,

Keith

They are separate audio files merged into a single track. Because these are different mic positions of the same recording I need to make sure any change made to one channel is applied to all other channels. Keeping them all in one track makes it simple.

@x42 So what’s the deal with vertical zoom, is it difficult/time consuming to implement or is it just a lack of interest from developers?

Neither. It is deliberate.

Can you explain why you want to look at a waveform? And what information you’d like to gain from that, or what operations you’ll want to perform? There are likely much more efficient ways to accomplish that instead of looking at a wave.

See also the following thread:

Do you have a reason not to use separate tracks in a group (with ‘Selection’ enabled)? https://manual.ardour.org/working-with-tracks/track-and-bus-groups/

I do this often with multi-mic recordings: having the different mic signals on separate tracks means it’s possible to mix them independently much more easily than if they’re all in the same track.

Neither. It is deliberate.

It’s not difficult and the developers are interested in it so you deliberately don’t implement it… ?

Vertical zoom is very useful when dealing with content that has a large dynamic range. For example some audio can be really quiet which makes it difficult to see details and make precise cuts.

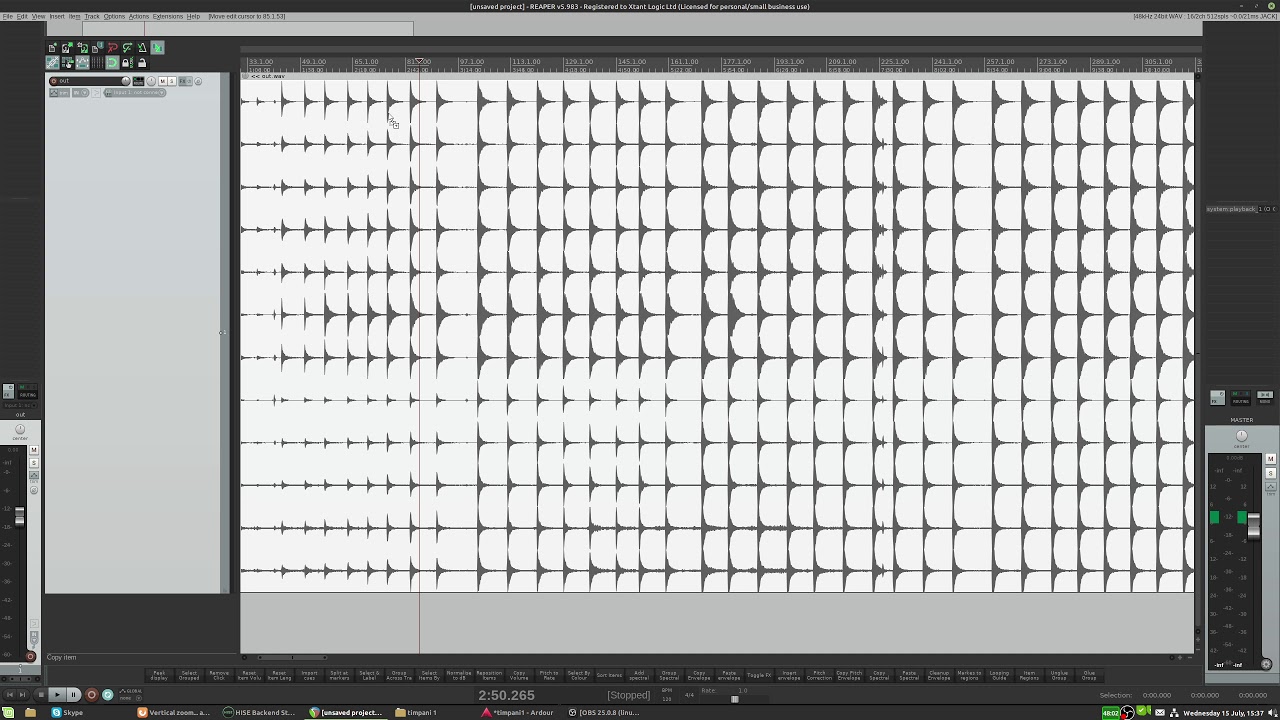

Here is a video demonstrating making a cut in Ardour and then again in Reaper (with vertical zoom). You can see instantly that it is much easier in Reaper because I can see what I’m doing. If this were a one-off it wouldn’t be too bad to roughly cut the audio, boost the gain, then make a more precise cut and reduce the gain again, but doing this hundreds of times is not practical.

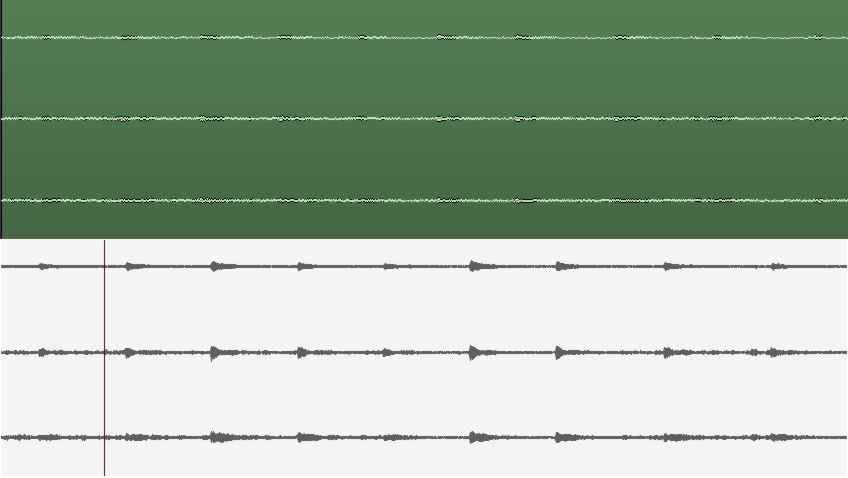

Here you can see a section of audio from just 3 of the channels. In Ardour (top image) I’m in logarithmic view and the waveform looks like noise. In Reaper (bottom image, with vertical zoom) you can see more detail and see that there is some audio there as well as noise.

My primary use is cutting samples. Mixing is not so important (although I use busses when needed). Only having to deal with one track makes my life much easier, I can cut and move hundreds of regions really quickly and I can apply batch scripts which work more quickly on a single track than multiple. But the main reason why it is necessary for me to use a single track is automation. I need to apply pitch shifting (and sometimes other automation) to each channel equally and this can only be done on a single track. It would be far too laborious to copy the automation of thousands of regions between 12 tracks and make sure any future edits are in sync.

Why not just jump to onsets or transients, that is more precise and also more efficient than doing it manually visually, isn’t it?

I use an onset detector too, but they are not 100% accurate, especially with quiet signals. With some samples (guitars for example) it’s often desirable to cut before the transient to get the noise of the pick as it hits the string but before it plucks it. There are similar situations with other instruments, like catching the breath of the player before a woodwind or brass note. The decision on where to make these more creative cuts is not really suitable for an onset/transient detector.

I’m sure there are other use cases for vertical zoom (otherwise there wouldn’t be so many feature requests for it) but my particular use case I admit is rather niche.

summary:

vertical zoom lets me see details more clearly without hearing them any more clearly; gain control forces me to see and hear things with more contrast

correct?

but also, if you’re literally making samples, wouldn’t you want to normalize everything anyway?

Yes. I don’t listen to the samples much during the initial cutting procedure; the listening comes later in the process. I’m dealing with several hours of recordings (8 for my current project) and listening to the whole thing again and again is a waste of time when I can quickly pinpoint areas of interest by looking at them. For example, if I see a big area of silence or a sudden spike in the waveform I can zoom in and check it out without having to listen to the whole recording.

For a lot of tasks looking at the waveform is faster than discovering it by listening. Even when it comes to the listening part if I find a problem and it’s a tricky thing to hear exactly which part of the audio has the issue I might open it in Audacity’s spectral view so I can see what I’m working with in even more detail.

Yes. The normalization is the final step (and Ardour even allows me to normalize on export which is great!). After the samples are cut, tuned, edited, etc. they are normalized individually. And then in the sampler a controllable and realistic gain curve can be added in to restore the relative gain between dynamic levels.

Even if I were to normalize the region before I cut the samples the quiet stuff will still be pretty quiet and the waveform difficult to see because the loud parts are already much louder. My recordings often have a huge dynamic range.
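A rough sketch of that arithmetic in Python, using made-up peak values (the three notes and their amplitudes are hypothetical, and this is not how Ardour implements normalization): one gain for the whole file leaves the quiet material just as far below the loud material as before, while per-region normalization gives each cut its own gain.

```python
import math

def peak_db(peak):
    """Peak level in dBFS for a linear peak amplitude (1.0 = full scale)."""
    return 20.0 * math.log10(peak)

def normalize_gain(peaks, target=1.0):
    """Linear gain that brings the loudest peak in `peaks` to `target`."""
    return target / max(peaks)

# Hypothetical peak amplitudes of three notes in one recording.
region_peaks = {"loud": 0.5, "medium": 0.05, "quiet": 0.0005}

# Normalizing the whole file applies one gain to every note.
whole_file_gain = normalize_gain(region_peaks.values())
after_whole = {name: p * whole_file_gain for name, p in region_peaks.items()}

# Normalizing each cut region separately gives every note its own gain.
after_each = {name: p * normalize_gain([p]) for name, p in region_peaks.items()}

for name in region_peaks:
    print(f"{name}: whole-file {peak_db(after_whole[name]):.1f} dBFS, "
          f"per-region {peak_db(after_each[name]):.1f} dBFS")
```

In this example the quiet note still peaks around -60 dBFS after whole-file normalization, so it remains hard to see next to the loud note.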

my imagined workflow here: normalize entire region, make rough cuts, normalize each region, trim region bounds.

is that actually notably slower than what you would do in reaper?

I’m not sure, I will test it and report back

The normalization of the whole file is not so useful because of the aforementioned dynamic range. However, I just played around with the gain boost/cut, and pushing this so that the loudest peaks go beyond 0 dB allows me to see the quietest peaks really well and effectively gives me the same view I would get from vertical zoom. The missing piece was that I needed to go back to linear waveform view, because in logarithmic view the noise is too prominent. I’ll explore making a Lua script to drop the track’s gain as the region is boosted so that I can play back if needed without destroying my ears.
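For anyone wanting to estimate how much boost that takes, here is a small Python sketch. It assumes the linear view draws height in proportion to amplitude and clamps at full scale; that is my simplification of the display, not something taken from Ardour's code, and the function names are made up.

```python
import math

def boost_for_visibility(quiet_peak_db, target_height=0.5):
    """dB of region boost needed so a quiet peak fills `target_height`
    (0..1) of the track lane in an assumed linear waveform view."""
    target_db = 20.0 * math.log10(target_height)
    return target_db - quiet_peak_db

def drawn_height(peak_db, boost_db):
    """Fraction of lane height after the boost, clamped at 1.0 (full scale)."""
    return min(1.0, 10.0 ** ((peak_db + boost_db) / 20.0))

boost = boost_for_visibility(quiet_peak_db=-50.0)
print(f"boost needed: {boost:.1f} dB")
print(f"quiet peak height: {drawn_height(-50.0, boost):.2f}")
print(f"loud peak height:  {drawn_height(-6.0, boost):.2f}")
```

Under these assumptions a -50 dBFS peak needs roughly 44 dB of boost to fill half the lane, and anything loud simply fills the lane and clips, which is why the track gain has to come down by the same amount before playback.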

One advantage/disadvantage (depending on situation) to this approach is that the effective zoom of each region is independent, whereas with “regular” vertical zoom all regions would be zoomed equally.

In my view, this would be problematic as a workflow, as would be @paul’s normalizing idea. You shouldn’t need to mess with volume in order to adjust the waveform zoom. For a classical live concert, normalizing each individual piece isn’t an option as it all should have the same room tone level. Also, when I’m cutting I always want to quickly listen back to double-check I’ve not cut in the wrong place. Ear damage and clipping distortion are big no-nos. I think the answer is still a proper waveform zoom feature. Samplitude/Sequoia, Reaper, Studio One, Pyramix, Cubase, Logic Pro X etc. all have it.

I think the answer is still a proper waveform zoom feature. Samplitude/Sequoia, Reaper, Studio One, Pyramix, Cubase, Logic Pro X etc all have it.

I agree 100%, especially since it’s apparently not difficult to implement.

read back, I did not say that. I said it’s a deliberate choice.

The reason it’s not present has nothing to do with whether it’s complicated to implement. I’d actually expect it to be time-consuming to implement right.

I deliberately reject it because a waveform is not a true representation of the signal (really it should be a lollipop graph, or up-sampled) and operations should not depend on a user interpreting it. Rather, tools should be added to facilitate the workflows that users currently use a waveview as a work-around for.
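A small Python illustration of why the drawn samples can mislead (a textbook example chosen for this sketch, not anything specific to Ardour's waveview): a full-scale sine sampled at a quarter of the sample rate with a 45 degree offset never has a sample on its crest, so the sample peak understates the real peak.

```python
import math

def sample_sine(freq_ratio, phase, count):
    """Sample sin(2*pi*freq_ratio*n + phase) for n = 0..count-1.
    freq_ratio is the signal frequency divided by the sample rate."""
    return [math.sin(2.0 * math.pi * freq_ratio * n + phase) for n in range(count)]

# Full-scale sine at a quarter of the sample rate, offset by 45 degrees,
# so every sample lands between the crests of the underlying wave.
samples = sample_sine(freq_ratio=0.25, phase=math.pi / 4.0, count=64)
sample_peak = max(abs(s) for s in samples)
true_peak = 1.0  # amplitude of the continuous sine, by construction

print(f"sample peak: {sample_peak:.4f}")
print(f"true peak:   {true_peak:.4f}")
print(f"difference:  {20.0 * math.log10(true_peak / sample_peak):.2f} dB")
```

Here the samples top out near 0.707 while the reconstructed signal reaches 1.0, a gap of about 3 dB that only an up-sampled view would show.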

If someone were to add this feature, many follow-up issues would also have to be addressed. For example, there would need to be a clear indication of the y-axis range of every track. Also, recording would have to reset the scale to clearly indicate clipping, etc.

While I’m in the camp that has not had any issues with log view for all my classical cutting, I have seen enough examples to suggest that something is needed. The question for me is not one of “true” representation but of a visual zoom, however unscientific, to assist in cutting. The feature is in enough of the mainstream DAWs for me to consider it a standard (a little like others think of the vertical MIDI velocity “sticks”). I can speak of my experiences in Sequoia: when the waves were too tiny, I increased zoom via a shortcut, and once all cutting was done in the problem area, I would just press another shortcut to reset the zoom. The default should obviously be 100%, but I was never confused about the y-axis range, because when zoom was engaged everything looked stretched and it was obvious. For recording, I’m definitely looking at meters, not the waveform, but that might just be me.

Why not add a lollipop graph? I don’t mind the tool as long as it makes the workflow easier.